If you are running UCS hardware with ESXi, then you should be using the custom ENIC/FNIC drivers specified in the Hardware and Software Interoperability Matrix.…

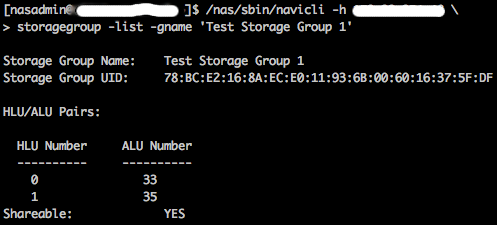

ESXi LUN ID Maximum

The VMware Configuration Maximums document is something I reference quite often. One configuration maximum that became relevant for me this week was under ESXi Host…

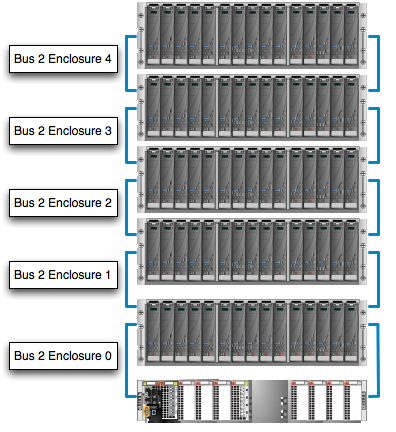

EMC storage, DAE failures, and vertical striping

EMC’s best practice for creating storage pools and RAID groups on mid- to low-end storage arrays (e.g. CLARiiON or VNX) has always been to…

Creating an LVM filesystem

This post is more for my own reference, but hopefully someone else finds it useful as well. There are several posts online about LVM, but the…