As you have probably heard by now, NSX took the spotlight at VMworld US 2013. Since the announcement, several people have approached me asking what NSX means given the existing vCNS product. As such, I figured I would provide my thoughts on the history and future of VMware networking.

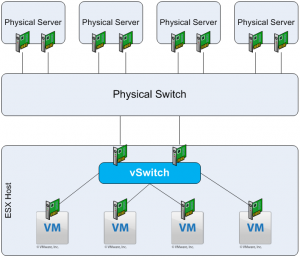

vSwitch

VMware started with a vSwitch in ESX. The vSwitch was easy to grasp because its configuration and functionality were rather simple. It has been, and commonly still is, the case that systems and networking teams are separate. The VMware infrastructure typically belongs to the systems team, and the systems team, especially prior to VMware, did not need to know much about the network. VMware, and virtualization in general, started the evolution of the network. The vSwitch was the first step, requiring system administrators to know a little bit more about the network.

With a relatively small environment, the vSwitch was great. As the infrastructure began to grow, it became clear that the vSwitch was not going to be able to solve the datacenter networking of the future. This was immediately apparent from a management perspective. More infrastructure meant more vSwitches, and more vSwitches meant more opportunity for human error. This problem is common to any growing environment. The solution is automation and central control. During the prime of the vSwitch, automation meant mostly scripts, and central control did not really exist. What was needed was an abstraction layer and a configuration management tool.

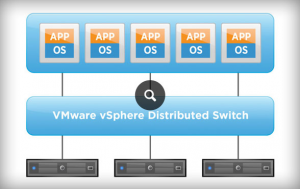

vNetwork Distributed Switch (VDS)

To address limitations in the vSwitch architecture, the VDS was created. The VDS provides an abstraction layer and a central configuration management point for virtual switches in a VMware environment. This technology also made it possible to introduce more advanced networking options, which in turn required more network knowledge in the VMware stack.

Initially, the VDS had several limitations, but since its introduction those limitations have been addressed. Now, the VDS is ready for prime time, and VMware made this clear when they stated that all new features would be added to the VDS only. This meant that the vSwitch era was coming to an end. While the VDS is nice, it still does not address the networking needs of a cloud datacenter. It lacks knowledge of the L2-L7 layers and does not address the complex network topologies of the datacenter.

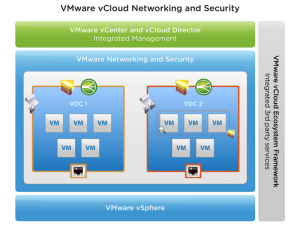

vCloud Network and Security (vCNS)

The next logical progression was to handle routing, firewalling, and load balancing. To do this, VMware introduced vCNS. vCNS was critical to the vCloud Director suite and the ability to address cloud needs at scale. Similar to VDS, vCNS was introduced with several limitations. Much work has gone into vCNS and it is now the de facto standard for VMware vCloud networking.

vCNS goes beyond VLANs and NIC teaming. vCNS allows more advanced networking to become virtual, bringing knowledge closer to the source and destination of network packets. vCNS also opens the door to addressing networking limitations, such as IPv4 address space and security concerns between systems and network teams, using overlay and tunneling technologies. With the ability to route, tunnel, and firewall workloads virtually, complete network automation and true network scale-out became possibilities.

Nicira (NVP)

Then along came OpenStack. OpenStack looked to revolutionize cloud infrastructure by making networking technology virtual, open-source, and completely programmable. OpenStack was divided into compute, network, and storage focuses and Nicira owned the networking component. VMware saw the opportunity and purchased Nicira and their NVP technology. OpenStack was interested in addressing the datacenter needs of today and the future. This meant software-defined everything. It also meant separating the control plane from the data plane throughout the stack and allowing potentially unlimited scale-out for any environment.

NSX

With the acquisition of Nicira, VMware began investing in OpenStack through the NVP product, but also took the opportunity to extend the product to meet the needs of non-OpenStack environments as well. NSX is considered the future of virtual networking. NSX looks to provide a pluggable architecture that is designed for the cloud.

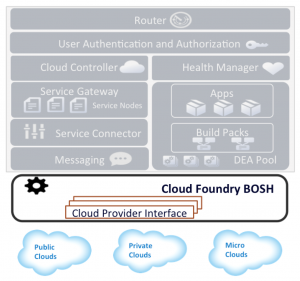

Coming from Cloud Foundry, I see NSX as similar to BOSH. BOSH is the orchestration engine for Cloud Foundry. BOSH is a distributed system that administers a Cloud Foundry instance. What makes BOSH powerful is that it has the ability to interface with multiple cloud environments. BOSH has a pluggable architecture provided by what are referred to as Cloud Provider Interfaces (CPIs). In short, a CPI provides knowledge of the minimum commands necessary to integrate with a cloud infrastructure and BOSH handles the rest. In visual form it looks something like this:

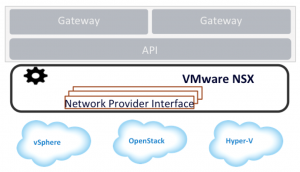
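For those who think better in code, the pluggable idea can be roughly sketched in a few lines of Python. This is only an illustration of the pattern, not how BOSH is actually implemented: the names `CloudProviderInterface`, `FakeVSphereCPI`, `Orchestrator`, `create_vm`, and `delete_vm` are all hypothetical and chosen for this example.

```python
from abc import ABC, abstractmethod


class CloudProviderInterface(ABC):
    """Hypothetical minimal contract that each cloud integration implements."""

    @abstractmethod
    def create_vm(self, name):
        """Create a VM and return an infrastructure-specific identifier."""

    @abstractmethod
    def delete_vm(self, vm_id):
        """Delete the VM with the given identifier."""


class FakeVSphereCPI(CloudProviderInterface):
    """Stand-in for a vSphere integration; it only tracks VMs in memory."""

    def __init__(self):
        self.vms = {}

    def create_vm(self, name):
        vm_id = "vsphere-" + name
        self.vms[vm_id] = name
        return vm_id

    def delete_vm(self, vm_id):
        self.vms.pop(vm_id, None)


class Orchestrator:
    """Knows nothing about a specific cloud; it only talks to the interface."""

    def __init__(self, cpi):
        self.cpi = cpi

    def deploy(self, names):
        # The orchestration logic is the same no matter which CPI is plugged in.
        return [self.cpi.create_vm(name) for name in names]

    def teardown(self, vm_ids):
        for vm_id in vm_ids:
            self.cpi.delete_vm(vm_id)


if __name__ == "__main__":
    orchestrator = Orchestrator(FakeVSphereCPI())
    ids = orchestrator.deploy(["web", "db"])
    print(ids)
    orchestrator.teardown(ids)
```

Supporting another infrastructure in this sketch would mean writing one more class that implements the same two methods; the orchestrator itself would not change.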

I see NSX in a similar fashion. NSX orchestrates the networking layer, but requires integration with the underlying infrastructure to do so. During VMworld, NSX for vSphere was announced. vSphere would represent one of the integrations possible. In a minimalistic fashion, NSX would look visually something like this:

NSX for vSphere

NSX for vSphere is what was announced at VMworld US 2013. Its goal is to provide true software-defined networking and remove all dependencies on the physical layer. Now what does this mean for vCNS? Well, you can think of NSX for vSphere as vCNS.next. NSX for vSphere is really a combination of components, concepts, and technology from both vCNS and NVP. To be clear, both products will be supported in parallel, similar to vSwitch and VDS, but vCNS 5.1 will be end-of-life on September 30. For more information, see http://kb.vmware.com/kb/2055410.

I hope this clears up the different networking components in the VMware stack, where they all fit, and what the future has in store!

© 2013 – 2021, Steve Flanders. All rights reserved.