I was recently deploying a Cloud Foundry instance and was experiencing errors during the deployment. From the several failed deployments, I received the following BOSH…

Hung Server/VMs post ESXi 5.0 upgrade

I have a home lab running vSphere on some PowerEdge T110 servers. My environment was running 4.1, but I recently (6 months ago!) decided to…

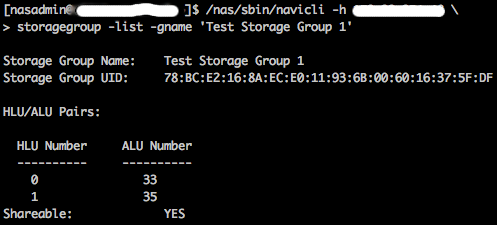

ESXi LUN ID Maximum

The VMware Configuration Maximums document is something I reference quite often. One configuration maximum that became relevant for me this week was under ESXi Host…

A general system error occurred: internal error vmodl.fault.HostCommunication

I am in the process of building my home lab. I recently purchased two servers and installed ESXi 4.1 on them. In addition, I deployed…

Configuring syslog on ESXi

I was assigned an interesting problem a few weeks back. A customer had requested that all ESXi servers have syslog configured in order to troubleshoot…

A general system error occurred: internal error

I recently tried to export the system logs from an ESXi host via the vSphere client. Instead of receiving the generated bundle, the host returned: A…

An error occurred during host configuration

When creating an NFS datastore on an ESXi host the other day, I received the following error message: An error occurred during host configuration. If…

Permanently enabling SSH on ESXi via PowerShell

As you all know by now, ESXi comes with SSH, which VMware now refers to as Tech Support Mode, disabled. The reasons behind this include…